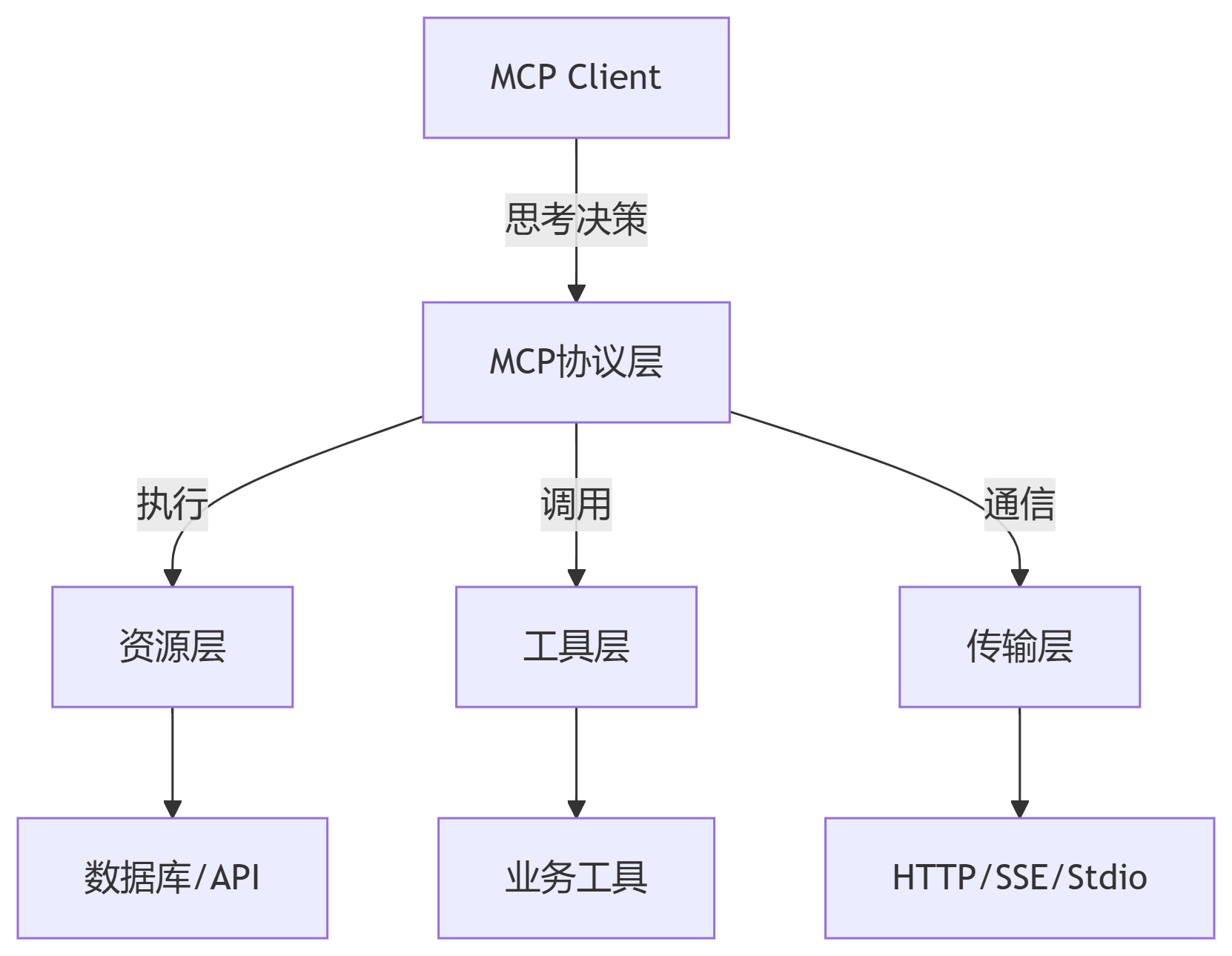

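Decoupled design: the LLM (Client) focuses on reasoning and decision-making, while external operations are delegated to tools (Server).

Dynamic extension: new tools can be added without changing model code; communication is standardized on JSON-RPC.

Security control: a sandbox isolates sensitive operations such as database writes.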

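Request example:

    {
      "jsonrpc": "2.0",
      "id": "req-123",
      "method": "calculate",
      "params": {"a": 5, "b": 3}
    }

Response example:

    {
      "jsonrpc": "2.0",
      "id": "req-123",
      "result": 8
    }

Key fields: in this simplified example, method names the tool and params carries its arguments. In the MCP protocol itself, tool invocations are sent with method set to "tools/call", with the tool name in params.name and the arguments in params.arguments.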
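
To make the message shape concrete, here is a minimal sketch of a JSON-RPC 2.0 dispatcher in plain Python. The TOOLS registry and the handle_request helper are hypothetical names chosen for illustration and are not part of any MCP SDK; the error codes -32601 and -32602 are the ones defined by the JSON-RPC 2.0 specification.

```python
import json

# Hypothetical tool registry: maps a method name to a plain Python callable.
TOOLS = {"calculate": lambda a, b: a + b}


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    resp = {"jsonrpc": "2.0", "id": req.get("id")}  # the id is echoed back unchanged
    tool = TOOLS.get(req.get("method"))
    if tool is None:
        resp["error"] = {"code": -32601, "message": "Method not found"}
    else:
        try:
            resp["result"] = tool(**req.get("params", {}))
        except TypeError as exc:  # missing or unexpected arguments
            resp["error"] = {"code": -32602, "message": f"Invalid params: {exc}"}
    return json.dumps(resp)


request = json.dumps(
    {"jsonrpc": "2.0", "id": "req-123", "method": "calculate", "params": {"a": 5, "b": 3}}
)
print(handle_request(request))
```

Because the registry is just a dictionary, adding a tool means adding an entry; the dispatcher itself does not change, which is the "dynamic extension" property in miniature.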

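Advantages of Streamable HTTP:

Session ID keeps state: the Mcp-Session-Id header identifies the session, so a client can pick it up again after a dropped connection.

Single-endpoint communication: one endpoint replaces the two-channel SSE setup, reducing the number of connections by a reported 90%.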
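
As a rough illustration of how a session header can carry state across requests, the sketch below keeps an in-memory store keyed by session ID. The SESSIONS store and the resolve_session helper are assumptions made for this example; a real server would normally reject an unknown session ID rather than silently issue a new one, and would persist state somewhere more durable than a dictionary.

```python
import uuid

# Hypothetical in-memory session store: session ID -> per-session state.
SESSIONS: dict[str, dict] = {}


def resolve_session(headers: dict[str, str]) -> tuple[str, dict]:
    """Return (session_id, state), creating a session when the header is absent or unknown."""
    session_id = headers.get("Mcp-Session-Id")
    if session_id not in SESSIONS:
        session_id = uuid.uuid4().hex
        SESSIONS[session_id] = {"calls": 0}
    return session_id, SESSIONS[session_id]


# First request: no header, so a session is created.
sid, state = resolve_session({})
state["calls"] += 1

# Reconnect: the client sends the ID back and sees the same state.
sid2, state2 = resolve_session({"Mcp-Session-Id": sid})
print(sid2 == sid, state2["calls"])
```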

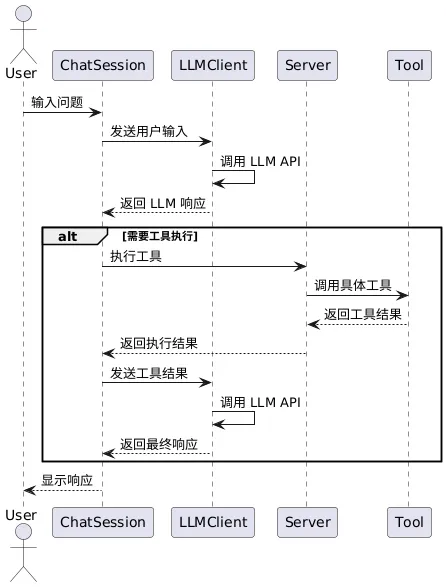

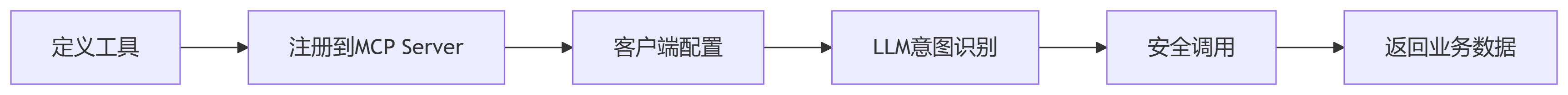

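Capability discovery: on startup, the Client calls getServerCapabilities() to fetch the tool list (in the MCP protocol itself, this corresponds to the initialize handshake followed by a tools/list request).

Intent recognition: the LLM selects a tool and its parameters based on the user's question.

Security interception: the Client validates the parameters before dispatching the call (for example, filtering out SQL injection attempts).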
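
The interception step can be sketched as a check that runs between the LLM's tool choice and the actual call. TOOL_SCHEMAS and validate_call below are hypothetical names; the schema format is a deliberately simplified stand-in for the JSON Schema that a real MCP server publishes for each tool.

```python
# Hypothetical tool catalogue, as a client might cache it after capability discovery.
TOOL_SCHEMAS = {
    "calculate": {"operation": str, "a": (int, float), "b": (int, float)},
}


def validate_call(name: str, params: dict) -> list[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    schema = TOOL_SCHEMAS.get(name)
    if schema is None:
        return [f"unknown tool: {name}"]
    problems = [f"unexpected parameter: {key}" for key in params if key not in schema]
    for key, expected in schema.items():
        if key not in params:
            problems.append(f"missing parameter: {key}")
        # bool is a subclass of int in Python, so it is rejected explicitly
        elif isinstance(params[key], bool) or not isinstance(params[key], expected):
            problems.append(f"bad type for {key}")
    return problems


print(validate_call("calculate", {"operation": "add", "a": 5, "b": 3}))
print(validate_call("calculate", {"operation": "add", "a": "5; DROP TABLE users", "b": 3}))
```

Type checking alone stops the injected string here because the parameter is declared numeric; free-text parameters would need their own rules.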

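    from fastmcp import FastMCP

    mcp = FastMCP("Math Server")

    @mcp.tool(name="calculate")  # register the function as a tool via the decorator
    def math_tool(operation: str, a: float, b: float) -> float:
        """Perform arithmetic; supports add/subtract/multiply/divide."""
        if operation == "add":
            return a + b
        elif operation == "subtract":
            return a - b
        elif operation == "multiply":
            return a * b
        elif operation == "divide":
            if b == 0:  # guard against division by zero
                raise ValueError("division by zero")
            return a / b
        raise ValueError(f"unsupported operation: {operation}")

    if __name__ == "__main__":
        mcp.run()  # defaults to the stdio transport

Debug with the MCP Inspector: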

    npx @modelcontextprotocol/inspector python math_server.py

Checks to verify:

Parameter type validation (non-numeric input is rejected)

Boundary testing (division by zero returns an error)
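
Both checks can also be scripted. The sketch below tests a standalone, plain-Python copy of the arithmetic logic so that it runs without FastMCP installed; the calculate and rejects helpers are written for this example only and are not taken from the original server.

```python
import operator

OPS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}


def calculate(operation: str, a: float, b: float) -> float:
    """Standalone copy of the arithmetic tool with explicit input checks."""
    for value in (a, b):
        # bool is a subclass of int, so it is excluded explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"expected a number, got {value!r}")
    if operation not in OPS:
        raise ValueError(f"unsupported operation: {operation}")
    if operation == "divide" and b == 0:
        raise ValueError("division by zero")
    return OPS[operation](a, b)


def rejects(*args) -> bool:
    """Return True when calculate() refuses the given arguments."""
    try:
        calculate(*args)
    except (TypeError, ValueError):
        return True
    return False


assert calculate("add", 5, 3) == 8
assert rejects("add", "5", 3)       # type check: non-numeric input
assert rejects("divide", 1, 0)      # boundary check: division by zero
print("all checks passed")
```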

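    import json
    from flask import Flask, Response

    app = Flask(__name__)

    @app.route('/stream', methods=['GET'])
    def sse_stream():
        def event_stream():
            while True:
                # Wait for the next tool-call result; get_tool_result() is a
                # placeholder the application must supply (it should block)
                result = get_tool_result()
                # SSE framing: a named event, a data line, then a blank line
                yield f"event: calculate\ndata: {json.dumps(result)}\n\n"
        return Response(event_stream(), mimetype="text/event-stream")

The matching browser client:

    const eventSource = new EventSource('http://localhost:5000/stream');

    // Listen for calculation results
    eventSource.addEventListener('calculate', (event) => {
      const data = JSON.parse(event.data);
      console.log("Result received:", data.result);
    });

    // Error handling
    eventSource.onerror = (err) => {
      console.error("SSE connection error:", err);
    };

Pitfall: the server must name the event (event: calculate), otherwise the addEventListener('calculate', ...) handler never fires. Also add a session ID so that messages for different clients do not get mixed up.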
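
One way to avoid that cross-talk is to give every session its own queue and to read only from the queue that matches the caller's session ID. The sketch below shows the idea with the standard library; publish, next_event, and the session names are hypothetical, and a production server would also need to expire idle queues.

```python
import json
import queue
from collections import defaultdict

# One queue per session ID so results never leak across clients.
_queues: dict[str, queue.Queue] = defaultdict(queue.Queue)


def publish(session_id: str, result: dict) -> None:
    """Queue a tool result for exactly one session."""
    _queues[session_id].put(result)


def next_event(session_id: str, event: str = "calculate") -> str:
    """Format the next queued result for this session as one SSE frame.

    Raises queue.Empty when nothing is pending for the session.
    """
    result = _queues[session_id].get_nowait()
    return f"event: {event}\ndata: {json.dumps(result)}\n\n"


publish("alice", {"result": 8})
publish("bob", {"result": 42})
frame = next_event("bob")  # bob only ever sees bob's result
print(frame, end="")
```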

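    import math

    @mcp.tool()
    def advanced_calc(expression: str) -> float:
        """Evaluate a math expression; supports sin/cos/log and other math functions."""
        try:
            # Caution: stripping builtins does not make eval() a real sandbox;
            # pass only trusted input or switch to a dedicated expression parser
            return eval(expression, {"__builtins__": None}, math.__dict__)
        except Exception as e:
            raise ValueError(f"Invalid expression: {e}") from e

2. Client-side call logic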

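    import json
    from fastmcp import Client

    async def solve_math_problem(question: str):
        async with Client(mcp) as client:
            # 1. Fetch the available tools
            tools = await client.list_tools()
            # Tool objects are not JSON-serializable as-is, so extract the fields we need
            tool_specs = [{"name": t.name, "description": t.description,
                           "parameters": t.inputSchema} for t in tools]
            # 2. Build the LLM prompt
            prompt = f"Question: {question}. Available tools: {json.dumps(tool_specs)}"
            # 3. Parse the tool call returned by the LLM (llm is a placeholder for your model client)
            tool_call = await llm.generate(prompt)
            # 4. Execute the call
            result = await client.call_tool(tool_call.name, tool_call.params)
            # Return the text result; newer fastmcp releases expose it as result.content[0].text
            return result[0].text

Unified endpoint management: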

    mcp = FastMCP("AI Server", endpoint="/mcp")  # a single endpoint serves POST/GET/SSE

Context sharing:

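    @mcp.tool()
    def recommend_product(user_id: str):
        ctx = get_context()  # fetch the session context
        history = ctx.get("purchase_history")
        # Bind values as parameters instead of interpolating them into the SQL
        # string (assumes db.query accepts a parameter list)
        placeholders = ", ".join("?" for _ in history)
        return db.query(f"SELECT * FROM products WHERE category IN ({placeholders})", list(history))

Seamless OpenAPI integration: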

    from fastmcp.integrations import FastAPIPlugin

    fastapi_app.include_router(FastAPIPlugin(mcp).router)

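    import logging
    import re

    from fastapi import FastAPI, Request

    app = FastAPI()
    logger = logging.getLogger(__name__)

    # Input sanitization (defense in depth; prefer parameterized queries)
    def sanitize_input(sql: str) -> str:
        return re.sub(r"[;\\\"']", "", sql)  # strip dangerous characters

    # Tiered permissions
    TOOL_PERMISSIONS = {"query_db": ["user"], "execute_payment": ["admin"]}

    # Audit logging
    @app.middleware("http")
    async def log_requests(request: Request, call_next):
        logger.info(f"{request.client} called {request.url.path}")
        return await call_next(request)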
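
The permission table only helps if something consults it before a tool runs. The following sketch shows one possible deny-by-default check; is_allowed and call_tool_checked are hypothetical helpers, and the table is read literally (a role must be listed for a tool to use it, with no inheritance between roles).

```python
TOOL_PERMISSIONS = {"query_db": ["user"], "execute_payment": ["admin"]}


def is_allowed(tool: str, role: str) -> bool:
    """Deny by default: unknown tools and unlisted roles are refused."""
    return role in TOOL_PERMISSIONS.get(tool, [])


def call_tool_checked(tool: str, role: str, handler, **params):
    """Run handler(**params) only when the role may use the tool."""
    if not is_allowed(tool, role):
        raise PermissionError(f"role {role!r} may not call {tool!r}")
    return handler(**params)


# Example: a user may query, but may not trigger a payment.
print(call_tool_checked("query_db", "user", lambda table: f"rows from {table}", table="products"))
print(is_allowed("execute_payment", "user"))
```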

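Source code and resources:

Master the MCP architecture and you become a "key bridge" for putting large models into production. Start with local tool calls, then work up to enterprise-grade integration scenarios. More video lessons and materials on AI large-model application development are available at 聚客AI学院.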