本文全面解析Hugging Face Transformers库的核心功能,通过丰富示例和最佳实践,带你快速掌握预训练模型的加载、使用和微调技术。

# 安装核心库(推荐使用虚拟环境) pip install transformers # 安装加速库(可选但推荐) pip install accelerate # 安装PyTorch(根据CUDA版本选择) pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 # 安装可选依赖 pip install datasets evaluate sentencepiece

import transformers

import torch

print(f"Transformers版本: {transformers.__version__}")

print(f"PyTorch版本: {torch.__version__}")

print(f"GPU可用: {'是' if torch.cuda.is_available() else '否'}")

# 输出示例:

# Transformers版本: 4.45.1

# PyTorch版本: 2.2.1+cu118

# GPU可用: 是import os # 设置模型缓存目录(避免重复下载) os.environ['TRANSFORMERS_CACHE'] = '/path/to/cache/dir' # 设置离线模式(仅使用缓存) os.environ['TRANSFORMERS_OFFLINE'] = '1'

from transformers import pipeline

# 文本分类

classifier = pipeline("text-classification")

result = classifier("Hugging Face Transformers is amazing!")

print(result)

# [{'label': 'POSITIVE', 'score': 0.9998}]

# 命名实体识别

ner = pipeline("ner", grouped_entities=True)

result = ner("My name is John and I work at Google in New York.")

print(result)

# [{'entity_group': 'PER', 'score': 0.99, 'word': 'John'},

# {'entity_group': 'ORG', 'score': 0.98, 'word': 'Google'},

# {'entity_group': 'LOC', 'score': 0.99, 'word': 'New York'}]

# 文本生成

generator = pipeline("text-generation", model="gpt2")

result = generator("In a world where", max_length=50, num_return_sequences=2)

for i, res in enumerate(result):

print(f"生成结果 {i+1}: {res['generated_text']}\n")# 中文情感分析

classifier_zh = pipeline("text-classification", model="bert-base-chinese")

result = classifier_zh("这部电影太精彩了,强烈推荐!")

print(result) # [{'label': '积极', 'score': 0.99}]

# 日语问答

qa_ja = pipeline("question-answering", model="cl-tohoku/bert-base-japanese")

context = "東京は日本の首都です。"

question = "日本の首都はどこですか?"

result = qa_ja(question=question, context=context)

print(f"回答: {result['answer']}") # 東京# 图像分类

vision_classifier = pipeline("image-classification")

result = vision_classifier("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg")

print([(res['label'], f"{res['score']:.2f}") for res in result[:3]])

# [('tiger cat', '0.43'), ('tabby', '0.23'), ('Egyptian cat', '0.20')]

# 图像描述生成

image_captioner = pipeline("image-to-text", model="Salesforce/blip-image-captioning-base")

result = image_captioner("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg")

print(f"图像描述: {result[0]['generated_text']}")

# 一只胖乎乎的猫坐在草地上from transformers import AutoTokenizer

# 加载分词器

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

# 分词示例

text = "Hugging Face Transformers is awesome!"

tokens = tokenizer.tokenize(text)

print(f"分词结果: {tokens}")

# ['hugging', 'face', 'transformers', 'is', 'awesome', '!']

# 编码为模型输入

inputs = tokenizer(text, return_tensors="pt")

print(f"编码结果: {inputs}")

# {

# 'input_ids': tensor([[101, 17662, 6161, 11303, 2003, 7073, 999, 102]]),

# 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0]]),

# 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1]])

# }from transformers import AutoModel

import torch

# 加载模型

model = AutoModel.from_pretrained("bert-base-uncased")

# 前向传播

with torch.no_grad():

outputs = model(**inputs)

# 输出解析

last_hidden_state = outputs.last_hidden_state

print(f"隐藏状态形状: {last_hidden_state.shape}") # torch.Size([1, 8, 768])# 文本分类

from transformers import AutoModelForSequenceClassification

cls_model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased-finetuned-sst-2-english")

cls_outputs = cls_model(**inputs)

logits = cls_outputs.logits

predicted_class = torch.argmax(logits).item()

print(f"预测类别: {'积极' if predicted_class else '消极'}") # 积极

# 问答任务

from transformers import AutoModelForQuestionAnswering

qa_model = AutoModelForQuestionAnswering.from_pretrained("bert-large-uncased-whole-word-masking-finetuned-squad")

qa_inputs = tokenizer("Hugging Face is a company based in New York.",

"Where is Hugging Face based?",

return_tensors="pt")

qa_outputs = qa_model(**qa_inputs)

answer_start = torch.argmax(qa_outputs.start_logits)

answer_end = torch.argmax(qa_outputs.end_logits) + 1

answer = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(qa_inputs["input_ids"][0][answer_start:answer_end])

print(f"回答: {answer}") # New York# 基础英语BERT

model_bert = AutoModel.from_pretrained("bert-base-uncased")

# 中文BERT

model_bert_zh = AutoModel.from_pretrained("bert-base-chinese")

# 多语言BERT

model_bert_multi = AutoModel.from_pretrained("bert-base-multilingual-cased")

# 蒸馏BERT(轻量版)

model_distilbert = AutoModel.from_pretrained("distilbert-base-uncased")# GPT-2

model_gpt2 = AutoModelForCausalLM.from_pretrained("gpt2")

# 中文GPT

model_gpt_zh = AutoModelForCausalLM.from_pretrained("uer/gpt2-chinese-cluecorpussmall")

# GPT-Neo(GPT-3开源替代)

model_gpt_neo = AutoModelForCausalLM.from_pretrained("EleutherAI/gpt-neo-1.3B")# 文本到文本转换

model_t5 = AutoModelForSeq2SeqLM.from_pretrained("t5-small")

# 使用示例

tokenizer_t5 = AutoTokenizer.from_pretrained("t5-small")

inputs = tokenizer_t5("translate English to French: Hello, how are you?", return_tensors="pt")

outputs = model_t5.generate(**inputs)

print(tokenizer_t5.decode(outputs[0])) # <pad> Bonjour, comment allez-vous?</s># ViT图像分类

from transformers import ViTFeatureExtractor, ViTForImageClassification

import requests

from PIL import Image

feature_extractor = ViTFeatureExtractor.from_pretrained("google/vit-base-patch16-224")

model_vit = ViTForImageClassification.from_pretrained("google/vit-base-patch16-224")

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/cats.png"

image = Image.open(requests.get(url, stream=True).raw)

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model_vit(**inputs)

logits = outputs.logits

predicted_class_idx = logits.argmax(-1).item()

print(f"预测类别: {model_vit.config.id2label[predicted_class_idx]}")from datasets import load_dataset

# 加载IMDB数据集

dataset = load_dataset("imdb")

print(dataset["train"][0]) # 查看样本

# 数据集拆分

train_dataset = dataset["train"].select(range(1000)) # 小型训练集

test_dataset = dataset["test"].select(range(200)) # 小型测试集from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

# 分词函数

def tokenize_function(examples):

return tokenizer(

examples["text"],

padding="max_length",

truncation=True,

max_length=256

)

# 应用分词

tokenized_train = train_dataset.map(tokenize_function, batched=True)

tokenized_test = test_dataset.map(tokenize_function, batched=True)from transformers import AutoModelForSequenceClassification, TrainingArguments, Trainer

import numpy as np

from evaluate import load

# 加载模型

model = AutoModelForSequenceClassification.from_pretrained(

"distilbert-base-uncased",

num_labels=2 # 二分类

)

# 训练参数

training_args = TrainingArguments(

output_dir="./results",

evaluation_strategy="epoch",

learning_rate=2e-5,

per_device_train_batch_size=16,

per_device_eval_batch_size=16,

num_train_epochs=3,

weight_decay=0.01,

logging_dir="./logs",

)

# 评估函数

accuracy_metric = load("accuracy")

def compute_metrics(eval_pred):

logits, labels = eval_pred

predictions = np.argmax(logits, axis=-1)

return accuracy_metric.compute(predictions=predictions, references=labels)

# 创建Trainer

trainer = Trainer(

model=model,

args=training_args,

train_dataset=tokenized_train,

eval_dataset=tokenized_test,

compute_metrics=compute_metrics,

)

# 开始训练

trainer.train()

# 评估模型

eval_results = trainer.evaluate()

print(f"评估准确率: {eval_results['eval_accuracy']:.4f}")# 创建pipeline使用微调模型

custom_pipeline = pipeline(

"text-classification",

model=model,

tokenizer=tokenizer,

device=0 if torch.cuda.is_available() else -1

)

# 测试新样本

results = custom_pipeline([

"This movie was absolutely fantastic!",

"The worst film I've ever seen in my life."

])

for res in results:

print(f"文本: {res['text']}")

print(f"情感: {'积极' if res['label'] == 'LABEL_1' else '消极'}, 置信度: {res['score']:.4f}\n")# 动态量化(推理加速)

from transformers import AutoModelForSequenceClassification

# 加载原始模型

model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased")

# 应用动态量化

quantized_model = torch.quantization.quantize_dynamic(

model,

{torch.nn.Linear},

dtype=torch.qint8

)

# 保存量化模型

quantized_model.save_pretrained("./quantized_model")

from huggingface_hub import notebook_login

from transformers import AutoModel, AutoTokenizer

# 登录Hugging Face账号

notebook_login()

# 加载模型

model = AutoModel.from_pretrained("bert-base-uncased")

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

# 微调模型(示例)

# ... 微调代码 ...

# 保存到本地

model.save_pretrained("./my_finetuned_bert")

tokenizer.save_pretrained("./my_finetuned_bert")

# 上传到Hugging Face Hub

model.push_to_hub("my-username/my-finetuned-bert")

tokenizer.push_to_hub("my-username/my-finetuned-bert")from transformers import BertConfig, BertModel # 自定义BERT配置 config = BertConfig( vocab_size=52000, # 自定义词汇表大小 hidden_size=768, num_hidden_layers=8, # 减少层数 num_attention_heads=8, intermediate_size=3072, max_position_embeddings=256, # 减少最大长度 ) # 从配置初始化模型 custom_model = BertModel(config) # 查看模型结构 print(custom_model)

Pipeline快速使用:

# 三行代码实现文本分类

classifier = pipeline("text-classification")

result = classifier("Awesome product!")

print(result[0]['label']) # POSITIVE/NEGATIVEAutoClass加载模式:

# 灵活加载模型和分词器

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

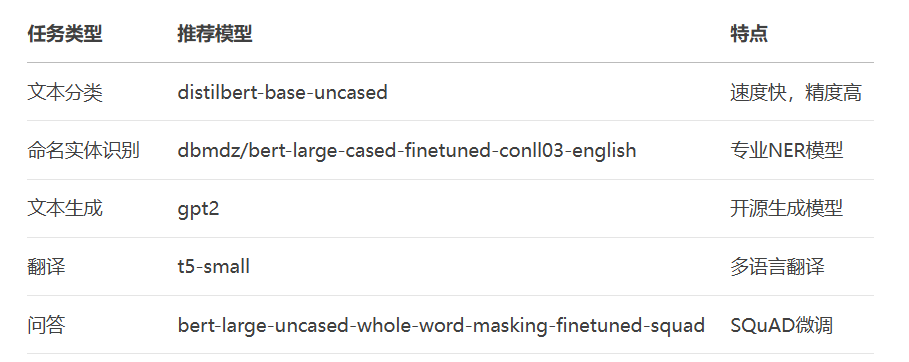

model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased")模型选择指南:

微调最佳实践:

# 使用Trainer简化训练 trainer = Trainer( model=model, args=TrainingArguments(per_device_train_batch_size=16, learning_rate=2e-5), train_dataset=dataset, compute_metrics=compute_metrics ) trainer.train()

通过掌握Hugging Face Transformers库,你将能够快速实现各种NLP和计算机视觉任务,为构建真实世界的AI应用提供强大支持!更多AI大模型应用开发学习视频内容和资料,尽在聚客AI学院。