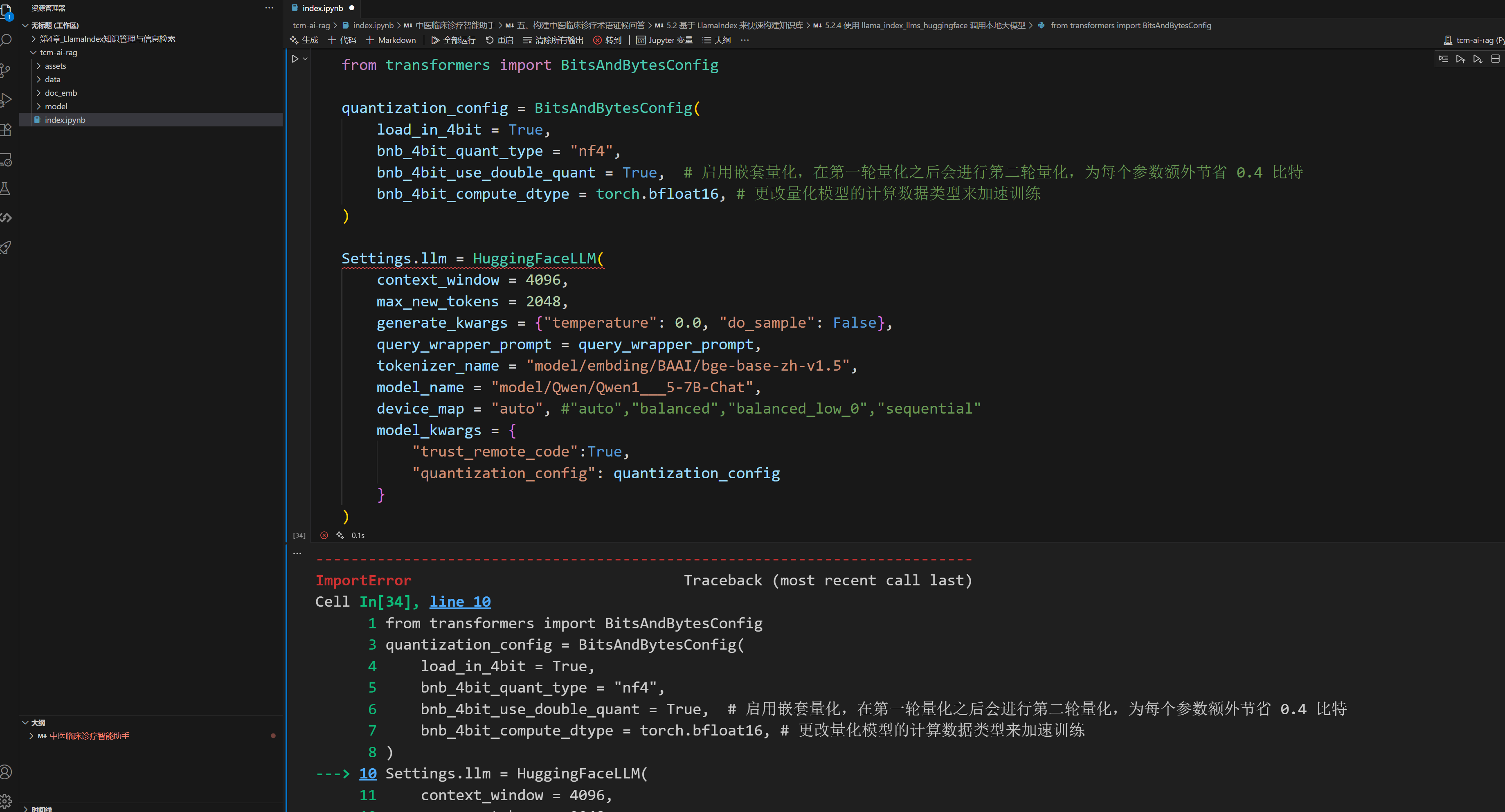

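While running code that loads a local LLM, I got errors asking me to install or update the bitsandbytes and accelerate libraries, plus a warning that my torch version did not match. I reinstalled bitsandbytes, accelerate, and torch and upgraded all three to their latest versions, but I still get the following error: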
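Before reinstalling again, it may be worth confirming that the notebook kernel and the `pip` used for the reinstall belong to the same Python environment. The traceback paths show the kernel running from `d:\AIConda\env\tcm-ai-rag`. Below is a minimal sketch using only the standard library. `report_versions` is a hypothetical helper name, not part of any library.

```python
import sys
import importlib.metadata


def report_versions(packages=("torch", "transformers", "accelerate", "bitsandbytes")):
    """Return {package: installed version or None} as seen by *this* interpreter.

    Hypothetical helper: it only reads installed distribution metadata,
    it does not import the packages themselves.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this interpreter's environment
    return versions


if __name__ == "__main__":
    # The interpreter the kernel actually runs; pip must install into this one.
    print("python:", sys.executable)
    for name, ver in report_versions().items():
        print(f"{name:>13}: {ver or 'NOT FOUND'}")
```

If the printed `python:` path is not the `tcm-ai-rag` interpreter, or `bitsandbytes` shows `NOT FOUND`, install into that exact interpreter (for example `%pip install -U bitsandbytes` from a notebook cell, or `<printed path> -m pip install -U bitsandbytes`) and then restart the kernel.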

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Cell In[35], line 10

1 from transformers import BitsAndBytesConfig

3 quantization_config = BitsAndBytesConfig(

4 load_in_4bit = True,

5 bnb_4bit_quant_type = "nf4",

6 bnb_4bit_use_double_quant = True, # enable nested quantization: a second round of quantization runs after the first, saving an extra 0.4 bits per parameter

7 bnb_4bit_compute_dtype = torch.bfloat16, # change the compute dtype of the quantized model to speed up training

8 )

---> 10 Settings.llm = HuggingFaceLLM(

11 context_window = 4096,

12 max_new_tokens = 2048,

13 generate_kwargs = {"temperature": 0.0, "do_sample": False},

14 query_wrapper_prompt = query_wrapper_prompt,

15 tokenizer_name = "model/embding/BAAI/bge-base-zh-v1.5",

16 model_name = "model/Qwen/Qwen1___5-7B-Chat",

17 device_map = "auto", #"auto","balanced","balanced_low_0","sequential"

18 model_kwargs = {

19 "trust_remote_code":True,

20 "quantization_config": quantization_config

21 }

22 )

File d:\AIConda\env\tcm-ai-rag\lib\site-packages\llama_index\llms\huggingface\base.py:212, in HuggingFaceLLM.__init__(self, context_window, max_new_tokens, query_wrapper_prompt, tokenizer_name, model_name, model, tokenizer, device_map, stopping_ids, tokenizer_kwargs, tokenizer_outputs_to_remove, model_kwargs, generate_kwargs, is_chat_model, callback_manager, system_prompt, messages_to_prompt, completion_to_prompt, pydantic_program_mode, output_parser)

210 """Initialize params."""

211 model_kwargs = model_kwargs or {}

--> 212 model = model or AutoModelForCausalLM.from_pretrained(

213 model_name, device_map=device_map, **model_kwargs

214 )

216 # check context_window

217 config_dict = model.config.to_dict()

File d:\AIConda\env\tcm-ai-rag\lib\site-packages\transformers\models\auto\auto_factory.py:600, in _BaseAutoModelClass.from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

598 if model_class.config_class == config.sub_configs.get("text_config", None):

599 config = config.get_text_config()

--> 600 return model_class.from_pretrained(

601 pretrained_model_name_or_path, *model_args, config=config, **hub_kwargs, **kwargs

602 )

603 raise ValueError(

604 f"Unrecognized configuration class {config.__class__} for this kind of AutoModel: {cls.__name__}.\n"

605 f"Model type should be one of {', '.join(c.__name__ for c in cls._model_mapping)}."

606 )

File d:\AIConda\env\tcm-ai-rag\lib\site-packages\transformers\modeling_utils.py:317, in restore_default_torch_dtype.<locals>._wrapper(*args, **kwargs)

315 old_dtype = torch.get_default_dtype()

316 try:

--> 317 return func(*args, **kwargs)

318 finally:

319 torch.set_default_dtype(old_dtype)

File d:\AIConda\env\tcm-ai-rag\lib\site-packages\transformers\modeling_utils.py:4887, in PreTrainedModel.from_pretrained(cls, pretrained_model_name_or_path, config, cache_dir, ignore_mismatched_sizes, force_download, local_files_only, token, revision, use_safetensors, weights_only, *model_args, **kwargs)

4884 hf_quantizer = None

4886 if hf_quantizer is not None:

-> 4887 hf_quantizer.validate_environment(

4888 torch_dtype=torch_dtype,

4889 from_tf=from_tf,

4890 from_flax=from_flax,

4891 device_map=device_map,

4892 weights_only=weights_only,

4893 )

4894 torch_dtype = hf_quantizer.update_torch_dtype(torch_dtype)

4895 device_map = hf_quantizer.update_device_map(device_map)

File d:\AIConda\env\tcm-ai-rag\lib\site-packages\transformers\quantizers\quantizer_bnb_4bit.py:76, in Bnb4BitHfQuantizer.validate_environment(self, *args, **kwargs)

72 raise ImportError(

73 f"Using `bitsandbytes` 4-bit quantization requires Accelerate: `pip install 'accelerate>={ACCELERATE_MIN_VERSION}'`"

74 )

75 if not is_bitsandbytes_available(check_library_only=True):

---> 76 raise ImportError(

77 "Using `bitsandbytes` 4-bit quantization requires the latest version of bitsandbytes: `pip install -U bitsandbytes`"

78 )

79 if not is_torch_available():

80 raise ImportError(

81 "The bitsandbytes library requires PyTorch but it was not found in your environment. "

82 "You can install it with `pip install torch`."

83 )

ImportError: Using `bitsandbytes` 4-bit quantization requires the latest version of bitsandbytes: `pip install -U bitsandbytes`
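From my reading of the transformers source (an assumption worth checking against the installed version), `is_bitsandbytes_available(check_library_only=True)` does not look at CUDA or at the bitsandbytes version at all. It returns False only when the package cannot be found in the current environment, and that result is computed once, when `transformers` is first imported. If that holds, the "requires the latest version" message is misleading here: the kernel running `Cell In[35]` most likely either cannot see bitsandbytes, or imported transformers before bitsandbytes was installed and was never restarted. The sketch below roughly mirrors that lookup with the standard library; `package_visible` is a hypothetical name, not a transformers API.

```python
import importlib.metadata
import importlib.util


def package_visible(name):
    """Rough stand-in (an assumption) for transformers' internal package check:
    the module must be findable AND have installed distribution metadata."""
    if importlib.util.find_spec(name) is None:
        return False  # nothing importable under this name in this interpreter
    try:
        importlib.metadata.version(name)
    except importlib.metadata.PackageNotFoundError:
        # Importable but no pip metadata, e.g. a local folder shadowing the package
        return False
    return True


if __name__ == "__main__":
    print("bitsandbytes visible:", package_visible("bitsandbytes"))
```

If this prints `True` in a freshly restarted kernel but the error persists, the cause lies elsewhere. Separately, `tokenizer_name` in the call above points to the `bge-base-zh-v1.5` embedding model rather than the Qwen model directory; once loading gets past this check, the tokenizer likely needs to come from `model/Qwen/Qwen1___5-7B-Chat` instead.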